OVERVIEW

Why does an Enterprise Information LLM Assistant matter to SMEs?

Enterprise information large language models (LLMs) are transformative tools for organizations, enhancing customer interactions and extracting valuable insights from complex, unstructured data. Here is how an enterprise information LLM can drive impact:

Customization and Specialization

Tailored to your business, enterprise LLMs adapt to specific needs, offering refined responses and accurate insights that align closely with your business goals.

Data Privacy and Security

Enterprise LLMs can be deployed so that your business data stays under your control, helping you protect sensitive customer and business information and meet industry compliance standards.

Increased Operational Efficiency

Automate routine tasks and streamline workflows, allowing employees to focus on higher-value activities and improving overall productivity.

Enhanced Innovation

LLMs accelerate research and development by generating ideas, analyzing trends, and delivering insights, driving innovation across departments.

Proprietary Data Utilization

Leverage your organization's unique data assets to create a competitive advantage, transforming proprietary information into actionable insights.

Scalability and Control

Scale LLMs to match your organizational needs, whether handling large volumes of data or delivering custom responses. Retain control over deployment and configuration to suit evolving business requirements.

Enterprise ChatGPT

Microsoft has integrated ChatGPT into its Azure OpenAI Service, providing businesses with access to advanced AI models such as DALL·E 2, GPT-4, and Codex. This integration enables enterprises to enhance operations, drive innovation, and improve customer experiences by leveraging Azure's robust computing capabilities and security infrastructure.

Disadvantage: too expensive for SMEs. The cost of Enterprise ChatGPT puts it out of reach for many small and medium-sized organizations.

Enterprise LLM Solution for SMEs

SMEs (small and medium-sized enterprises) need an affordable enterprise AI solution that meets the following requirements:

Easy to use and operate:

The organization can easily upload unstructured data, and end users find the assistant easy to use.

Affordable:

Costs stay within an SME's budget.

Accurate, with reference links:

Answers include reference links to their sources, so the organization can identify and update outdated data.

TECHNOLOGY

Advanced Technologies Explained

The following technologies form the foundation of our Enterprise AI Solution:

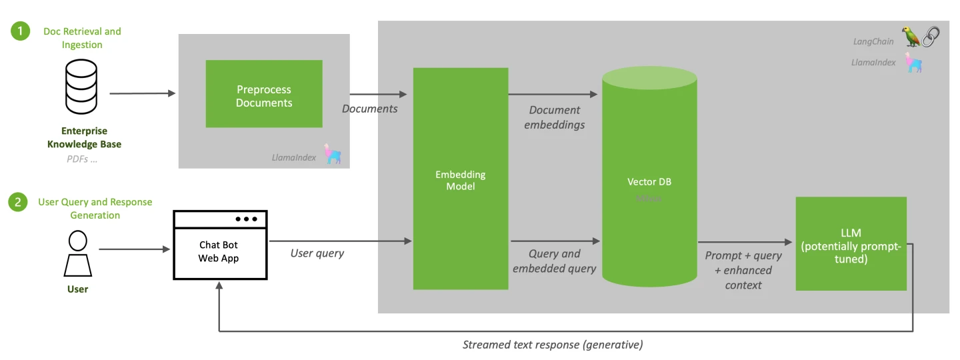

RAG (Retrieval-Augmented Generation)

RAG enhances the capabilities of large language models (LLMs) by allowing them to retrieve relevant information from external databases or knowledge sources, enriching responses with accurate, context-specific data.
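A minimal sketch of the retrieve-then-augment idea behind RAG, assuming a tiny in-memory document list and plain term-count cosine similarity in place of a real embedding model and vector database. The function names `retrieve` and `augment` and the sample documents are hypothetical; the final prompt would be sent to the LLM, which is not shown.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def augment(query: str, passages: list[str]) -> str:
    """Build a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical knowledge base for illustration.
docs = [
    "Annual leave is 20 days per year for full-time staff.",
    "The office is closed on public holidays.",
    "Expense claims must be submitted within 30 days.",
]
question = "How many days of annual leave do staff get?"
prompt = augment(question, retrieve(question, docs, k=1))
print(prompt)
```

In production the term-count vectors would typically be replaced by embeddings stored in a vector index, but the shape of the flow (retrieve, then build a grounded prompt) stays the same.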

LangChain

LangChain serves as a flexible interface that streamlines interactions with LLMs. It provides essential tools for prompt management, memory handling, indexing, and agent-based decision-making, simplifying complex AI workflows.
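LangChain's own classes are not reproduced here. The plain-Python sketch below only illustrates the underlying idea of chaining a prompt template, a model call, and an output parser into one pipeline, loosely modeled on the `|` piping style of LangChain Expression Language. `Step`, `fake_llm`, and the other names are hypothetical, and the "model" is a stub.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so that steps can be chained with the | operator."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Compose: the output of this step becomes the input of the next one.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


# Prompt management: fill a template from a dict of variables.
prompt = Step(lambda v: f"Summarize for {v['audience']}: {v['text']}")

# Stub model: a real chain would call an LLM here.
fake_llm = Step(lambda p: f"  SUMMARY: {p.split(': ', 1)[1]}  ")

# Output parser: trim whitespace and drop the label.
parser = Step(lambda out: out.strip().removeprefix("SUMMARY: "))

chain = prompt | fake_llm | parser
print(chain.invoke({"audience": "managers", "text": "Q3 sales rose 5%."}))  # Q3 sales rose 5%.
```

Keeping each stage as a separate step is what lets a framework swap the model, change the prompt, or add memory without rewriting the rest of the workflow.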

LLaMa 3

Known for its human-like text generation, LLaMa 3 enables natural language interactions across a wide range of applications, making it well suited to engaging user interactions and nuanced query handling.

Fine-Tuning

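Fine-tuning adapts a pretrained model to an organization's own data and tasks, improving its performance on them. Parameter-efficient fine-tuning techniques update only a small fraction of the model's weights, which reduces memory usage, speeds up training, and lowers GPU requirements.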
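Parameter-efficient fine-tuning is one common way to get the memory and GPU savings mentioned here. The source does not name a method, so this sketch assumes LoRA (low-rank adaptation), along with a hypothetical 4096 x 4096 projection and rank 8. LoRA freezes the original weight matrix W (d x k) and trains two small matrices B (d x r) and A (r x k), so the update B·A has r(d + k) trainable parameters instead of d·k.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when updating a full d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update B @ A,
    where B is d x r and A is r x k (W itself stays frozen)."""
    return r * (d + k)


d = k = 4096  # hypothetical projection size
r = 8         # hypothetical LoRA rank
print(full_params(d, k))                          # 16777216
print(lora_params(d, k, r))                       # 65536
print(full_params(d, k) // lora_params(d, k, r))  # 256
```

Because gradients and optimizer state are kept only for the small matrices, the savings in trainable parameters translate into lower memory use during training.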

SUMMARY

Solution Capabilities

The prompt flow employs the Retrieval-Augmented Generation (RAG) pattern to enhance query handling. It extracts the relevant query from the prompt, performs an AI search, and uses the results as grounding data for the foundation model. Grounding the model in retrieved, context-specific information makes its responses more accurate and better informed.
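The prompt flow can be sketched in three steps: extract the query, search, and build a grounded prompt. This is a minimal illustration under stated assumptions: the query is taken to be the latest user message (real systems often rewrite it with the LLM first), `fake_search` is a hypothetical stand-in for the AI search index, and the URL is a placeholder. Numbering the sources lets each answer cite reference links back to the original documents.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Hit:
    text: str
    url: str  # reference link shown to the user


def extract_query(messages: list[dict]) -> str:
    """Use the latest user message as the search query (simplifying assumption)."""
    for msg in reversed(messages):
        if msg["role"] == "user":
            return msg["content"].strip()
    raise ValueError("no user message found")


def build_grounded_prompt(query: str, hits: list[Hit]) -> str:
    """Number each source so the model can cite it as [1], [2], ..."""
    sources = "\n".join(f"[{i}] {h.text} (source: {h.url})" for i, h in enumerate(hits, 1))
    return (
        "Answer the question using only the numbered sources. "
        "Cite sources as [n]. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


def prompt_flow(messages: list[dict], search: Callable[[str], list[Hit]]) -> str:
    query = extract_query(messages)
    hits = search(query)  # AI search step
    return build_grounded_prompt(query, hits)


def fake_search(query: str) -> list[Hit]:
    # Hypothetical stand-in for the search index.
    return [Hit("Refunds are processed within 14 days.", "https://example.com/refund-policy")]


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "How long do refunds take?"},
]
print(prompt_flow(messages, fake_search))
```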

Prompt Engineering

Adaptable prompt structure, dynamic prompts, and built-in chain-of-thought (CoT) prompting.
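A dynamic prompt is assembled at request time rather than hard-coded. The sketch below, using Python's standard `string.Template`, shows one way to fill a prompt structure per request and switch a chain-of-thought instruction on or off. The template wording, `build_prompt`, and the company name are hypothetical.

```python
from string import Template

# Adaptable structure: placeholders filled in per request.
BASE = Template(
    "You are an assistant for $company.\n"
    "$instructions\n"
    "Question: $question"
)

COT_INSTRUCTION = "Think through the problem step by step before giving the final answer."
DIRECT_INSTRUCTION = "Give a concise, direct answer."


def build_prompt(company: str, question: str, use_cot: bool) -> str:
    """Assemble the prompt at request time, toggling chain-of-thought per query."""
    instructions = COT_INSTRUCTION if use_cot else DIRECT_INSTRUCTION
    return BASE.substitute(company=company, instructions=instructions, question=question)


print(build_prompt("Acme Ltd", "Which of our two plans is cheaper over 3 years?", use_cot=True))
```

Chain-of-thought instructions tend to help on multi-step questions such as comparisons and calculations, while simple lookups can use the shorter direct instruction.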

Custom Document Chunking

Extracts content from text-based documents, splits it into chunks of manageable size, and saves metadata with each chunk for use in the RAG pattern.
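A minimal chunking sketch, assuming fixed-size word windows with overlap; real pipelines often chunk by tokens or by document structure (headings, pages) instead. The `Chunk` type, the default sizes, and the metadata keys are hypothetical. The overlap keeps a sentence that straddles a boundary retrievable from either neighbouring chunk.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_document(text: str, source: str, size: int = 200, overlap: int = 50) -> list[Chunk]:
    """Split text into windows of `size` words, sharing `overlap` words between
    neighbouring windows, and attach source metadata to each chunk."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    # Stop once the remaining words are already covered by the previous overlap.
    for i, start in enumerate(range(0, max(len(words) - overlap, 1), step)):
        window = words[start:start + size]
        chunks.append(Chunk(" ".join(window), {"source": source, "chunk": i, "start_word": start}))
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
for c in chunk_document(doc, "policy.pdf", size=4, overlap=1):
    print(c.text, c.metadata)
```

The stored metadata (source file and position) is what later allows an answer to point back to where its information came from.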

Document Pre-Processing

Supported text-based formats: pdf, docx, html, htm, csv, md, pptx, txt, json, xlsx, xml, eml, msg.
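Pre-processing typically routes each file to an extractor based on its type before chunking. This sketch, using only the Python standard library, handles the plain-text, HTML, CSV, JSON, and XML formats from the list and deliberately leaves the binary and email formats unimplemented, since those need dedicated parsers. `extract_text` and `_HTMLText` are hypothetical names, and the input is assumed to be already decoded to a string.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET
from html.parser import HTMLParser
from pathlib import Path

# File types listed as supported; only some have extractors in this sketch.
SUPPORTED = {".pdf", ".docx", ".html", ".htm", ".csv", ".md", ".pptx",
             ".txt", ".json", ".xlsx", ".xml", ".eml", ".msg"}


class _HTMLText(HTMLParser):
    """Collects visible text from HTML, skipping <script> and <style> content."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = False

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip = True

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._skip = False

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def extract_text(name: str, raw: str) -> str:
    """Route a document to a plain-text extractor based on its file extension."""
    ext = Path(name).suffix.lower()
    if ext not in SUPPORTED:
        raise ValueError(f"unsupported file type: {ext}")
    if ext in (".txt", ".md"):
        return raw
    if ext in (".html", ".htm"):
        parser = _HTMLText()
        parser.feed(raw)
        parser.close()
        return " ".join(parser.parts)
    if ext == ".csv":
        return "\n".join(", ".join(row) for row in csv.reader(io.StringIO(raw)))
    if ext == ".json":
        return json.dumps(json.loads(raw), indent=2)
    if ext == ".xml":
        return " ".join(t.strip() for t in ET.fromstring(raw).itertext() if t.strip())
    # pdf, docx, pptx, xlsx, eml, and msg need dedicated parsers (not shown).
    raise NotImplementedError(f"no extractor for {ext} in this sketch")


print(extract_text("notes.html", "<p>Hello <b>world</b></p><script>x = 1</script>"))
```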

AI Search Integration

Uses hybrid search, which combines vector similarity with keyword matching to improve search accuracy. Supported search modes include full-text search, semantic search, vector search, and hybrid search.
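The source does not say how keyword and vector results are merged. One widely used method, assumed here, is Reciprocal Rank Fusion (RRF): each document scores the sum of 1 / (k + rank) across the ranked lists it appears in, so documents ranked well by both searches rise to the top. The document IDs below are hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one list.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1; k = 60 is a commonly used default.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


keyword_hits = ["doc_a", "doc_b", "doc_c"]  # e.g. full-text (keyword) results
vector_hits = ["doc_c", "doc_a", "doc_d"]   # e.g. embedding-similarity results
print(reciprocal_rank_fusion([keyword_hits, vector_hits]))  # ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

RRF uses only rank positions, so it avoids having to calibrate keyword scores against vector similarity scores, which live on different scales.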

CALL TO ACTION

Get Started with CubeDrive Today

The integration of LLaMa 3, Retrieval-Augmented Generation (RAG), and LangChain significantly enhances AI capabilities, enabling access to a wider knowledge base when generating responses. With this setup, a LLaMa 3-powered application can retrieve data from an external knowledge repository before crafting a response, resulting in more accurate and contextually relevant information. This approach is particularly valuable for enterprise solutions where the AI must deliver responses that are not only accurate but also specific to the organization’s context and the nature of each query.